Redesigning AI: Improvising With The Dynamics Of Humanitarian Innovation

The constantly shifting paradigms of humanitarian action, driven by the growing complexity and range of needs, have created a steep demand for innovation in the sector. Technological advancements such as Big Data analytics and AI have proved effective and efficient in humanitarian applications to date. However, like any other innovation, they have also introduced new challenges and risks, leaving end users vulnerable to their repercussions. Redesigning AI seems to be the most plausible way to accommodate the shifting dynamics of the sector.

The world has always struggled to meet people’s needs while improving efficiency in the humanitarian sector. Improvements and innovations in technology have led to the growing use of artificial intelligence (AI) systems over the past few decades to meet this demand. However, their application has always been debated and has ignited controversy, owing to its ethical and human rights implications.

“The instrumental conception of technology conditions every attempt to bring man into the right relation to technology. Everything depends on our manipulating technology in the proper manner as a means. We will, as we say, “get” technology “spiritually in hand.” We will master it. The will to mastery becomes all the more urgent the more technology threatens to slip from human control. But suppose now that technology was no mere means, how would it stand with the will to master it?”

Heidegger, Martin. “The Question Concerning Technology.” In The Question Concerning Technology and Other Essays (W. Lovitt, Trans.), pp. 3-35 (Heidegger 1977).

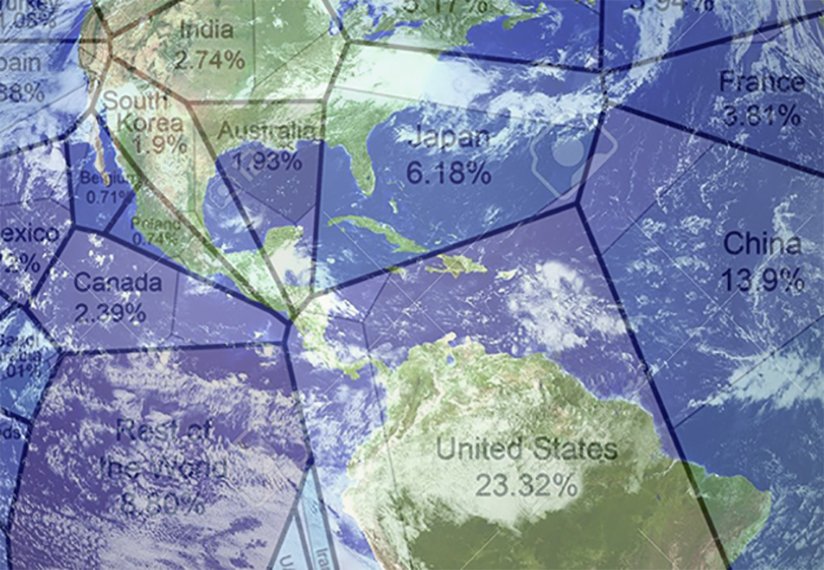

In 2013, the United Nations Office for the Coordination of Humanitarian Affairs officially proposed the recognition of information during crises as a basic humanitarian need. This led to a series of digital transformation and innovation initiatives in the humanitarian sector.

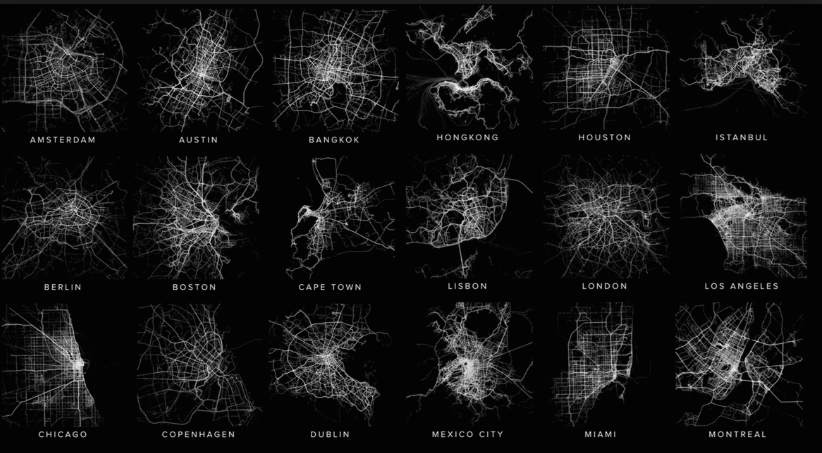

AI applications such as chatbots, biometrics, satellite imaging, and Big Data analysis became an essential part of this wider shift. Critical algorithms were developed primarily to assist human intelligence. Over time, however, the shift deepened power asymmetries. What began as the “AI for social good” movement gradually started turning into “unethical innovation”.

Chatbots, for instance, have been around for quite a few years and are now a prevalent part of our digital culture. Even though these bots don’t intend to take over the world (as in Transformers or The Matrix) or destroy humanity just yet, there have been instances of less-than-professional behaviour from them in the past. Microsoft’s teenage chatbot Tay and its successor Zo, and Nabla (a text generator at a Parisian healthcare facility) are just a few examples of AI going wrong.

Many chatbots (and other AI systems) are based on simple algorithms and are programmed manually. Their actions follow explicitly from the mappings laid out by their creators. Natural language, on the other hand, is complex, full of slang, misspellings, humour, and intonation. How can a machine be expected to interpret interactions involving sarcasm and intonation, which are sometimes beyond even human comprehension? The latest advancements in humanitarian innovation are a prime focus area for researchers.
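To make the point about hand-written mappings concrete, here is a minimal sketch of a keyword-matching bot. It is an illustration only, not how any of the chatbots named above actually work: the `RULES` table, the `FALLBACK` text, and the `reply` function are all hypothetical, and the replies are invented for the example.

```python
import re

# Hypothetical keyword-to-reply table; every entry is illustrative only.
RULES = {
    "shelter": "The nearest shelter list is available at the registration desk.",
    "water": "Water distribution runs daily at the main gate.",
    "great": "Glad to hear things are going well!",
}
FALLBACK = "Sorry, I did not understand that."


def reply(message: str) -> str:
    """Return the reply for the first rule keyword found in the message."""
    # Lowercase the message and keep only alphabetic words, dropping punctuation.
    words = re.findall(r"[a-z']+", message.lower())
    for keyword, response in RULES.items():
        if keyword in words:
            return response
    return FALLBACK


print(reply("Where is the shelter?"))              # matches the "shelter" rule
print(reply("Oh great, the tent flooded again."))  # sarcasm still matches "great"
print(reply("Where is the sheltr?"))               # misspelling falls through
```

The second message is sarcastic, yet the bot answers cheerfully because it only sees the word "great"; the third is a simple misspelling, and the bot gives up entirely. A mapping drawn up in advance has no way to account for either case.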

Redesigning AI for Humanitarian Innovation

Though there is no single agreed definition of humanitarian innovation, HIF-ALNAP (Obrecht and Warner, 2016) describes it as follows:

“Humanitarian innovation is an iterative process that identifies, adjusts, and diffuses ideas for improving humanitarian action.”

This process leads to consolidated learning and brings a measurable, relative improvement in effectiveness, efficiency, and quality over conventional approaches. It also widens adoption, which improves humanitarian performance, and ultimately leads to more exploration and research.

Currently, organisations involved in humanitarian endeavours collect, store, and share huge amounts of personal information. Challenges arise, however, when this data is mishandled or misused. A variety of guidelines promote the ethical and fair use of AI across different sectors. These cover the design, development, and deployment of new technologies.

Further, AI reduces communication to its barest instrumental forms. This widens the gap between humanitarian efforts and society itself. The disconnect compounds further when untested technology is used to experiment with data, which degrades the value of that data. In other words, AI ends up reproducing colonial power relations.

Sensitive data, especially from vulnerable groups (such as Internally Displaced Persons, refugees, mental health patients, and senior citizens), needs to be handled carefully to ensure its privacy and security. AI redesign, therefore, aims to broaden and deepen interdisciplinary efforts to enhance innovation in humanitarian assistance.

Redefining the Future of Humanity

AI is poised to make huge advances that could revolutionise medicine, transport, employment, and markets, and it is ready to reshape the fabric of society (and its citizens). On the other hand, its perils, owing to increased automation and misinformation, threaten that same society with bias and surveillance.

“Whenever I hear people saying AI is going to hurt people in the future I think, yeah, technology can generally always be used for good and bad and you need to be careful about how you build it … if you’re arguing against AI then you’re arguing against safer cars that aren’t going to have accidents, and you’re arguing against being able to better diagnose people when they’re sick” —Mark Zuckerberg

Redirecting (and arguably redesigning) AI is one step towards smoother work, democracy, and justice for humanitarian efforts in society. However, the question remains: how? Time will tell.

Founder Dinis Guarda
