As OpenAI launches a ChatGPT app for Apple’s Vision Pro, other global brands, including Google, Meta, Sony, and many more, are joining the push to explore the intersection of spatial computing and artificial intelligence.

With the launch of its ChatGPT app for Apple’s Vision Pro last week, OpenAI brought its GPT-4 Turbo LLM to Apple’s headset, opening the door to a more immersive AI experience.

“I was skeptical [of the Vision Pro] at first. I don’t bow down before the great god of Apple, but I was really, really blown away.” – James Cameron, Filmmaker (via Vanity Fair)

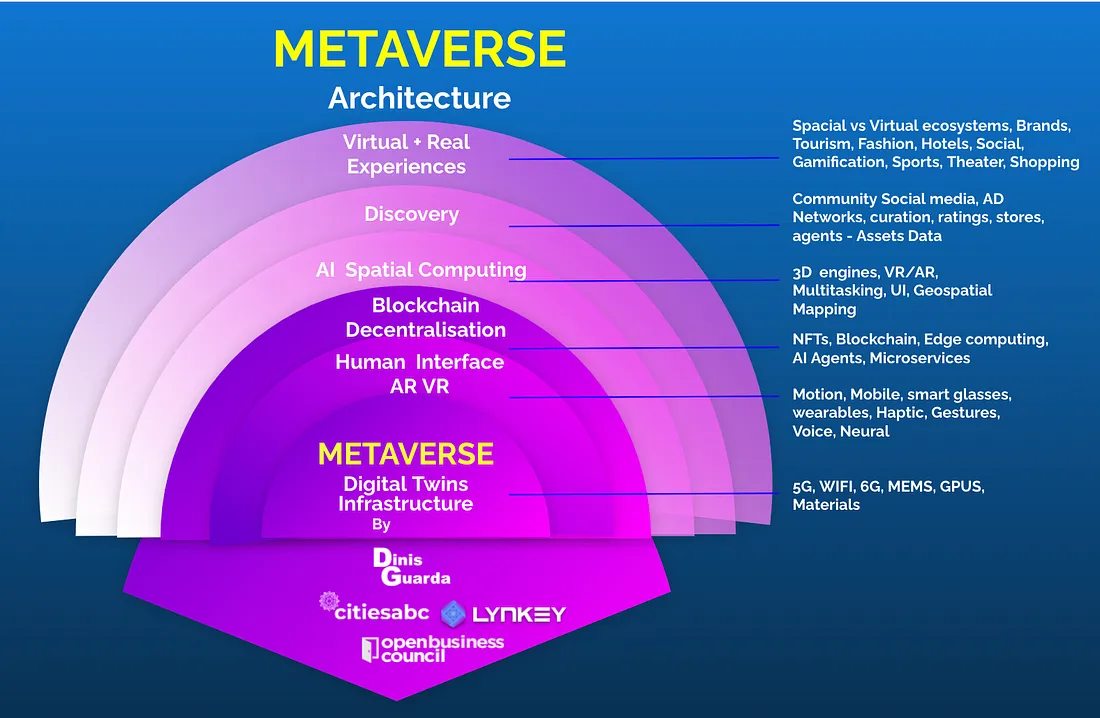

Spatial computing, often associated with augmented reality (AR) and virtual reality (VR), involves interaction between the digital and physical worlds in real time. Combining it with artificial intelligence (AI) extends what it can do: AI algorithms enhance spatial awareness, enabling systems to interpret and respond to the environment with greater precision. This synergy powers applications ranging from immersive AR experiences to advanced robotics and autonomous systems.

AI meets spatial computing: The trend

The ChatGPT for Vision Pro launch marks a crucial moment in the integration of AI into spatial computing, presenting opportunities to revolutionise education, training, industry, entertainment, and more. The visionOS app, available for free, hints at future capabilities like Spatial Audio, biometric verification, and VisionKit for developing multimodal apps. Apple’s CEO, Tim Cook, alluded to forthcoming GenAI progress and a significant OS upgrade, emphasising a focus on “edge processing” alongside visionOS.

Google joins the race with plans to rebrand its Bard chatbot as “Gemini” and introduce the advanced Ultra model, competing directly with GPT-4 in the multimodal AI arena. Google’s Gemini Advanced will leverage the Ultra 1.0 model for multimodal interactions accessible via an Android app, unifying interactions across text, voice, and images.

Meanwhile, the cryptic Project Iris, aimed at a 2024 headset launch, aligns with Samsung’s collaboration with Qualcomm and Google on a mixed-reality headset, suggesting Google’s entry into Android app-based mixed-reality headsets.

Other notable players include Meta’s Reality Labs with the Quest 3 and Ray-Ban AI smart glasses, Sony and Siemens with a spatial computing headset focused on the industrial metaverse, Microsoft and Siemens with their OpenAI-powered “Industrial Copilot”, and Alibaba with its multimodal Vision AI model, touted by some experts as surpassing GPT-4 in certain visual tasks.

The future of human-AI interaction: A glimpse

The launch of ChatGPT for Vision Pro not only marks a major achievement for OpenAI in its quest to develop beneficial AI for humanity but also offers a glimpse into the future of human-AI interaction. That vision includes more natural, intuitive, and immersive interactions in which users can tell AI assistants what they need through speech and effortlessly share real-world images for problem-solving.

Imagine redesigning the interior of a house by asking an AI assistant to work from the existing aesthetics of the space.

With a driving passion for creating relatable content, Pallavi progressed from freelance writing to a full-time professional role. Science, innovation, technology, and economics are a few of the (by no means only) fields she is zealous about. Beyond writing content for intelligenthq.com, citiesabc.com, and openbusinesscouncil.org, she loves reading, writing, and teaching.