AI is one of the buzzwords we hear most often these days, and its "trendiness" is not difficult to understand. A decade ago, most scientists were still working in their labs, refining the technology so that it could be applied to practical problems. As we enter 2019, we now hold in our hands and pockets a technology capable of voice recognition, synthesised voices, fake imagery and augmented reality, and driverless trains and cars… AI is now part of our daily lives, even if we don't recognise it as such. Just think of Smart Compose, Google's assisted email-writing feature, which anticipates your sentences. That is AI, powered by machine learning!

AI's rapid evolution has brought a whole array of new issues to the table, issues that were not previously considered in detail, particularly in terms of ethics. It has also awakened the proverbial anxiety of man versus machine. Is the machine helping women and men, or is it a rival?

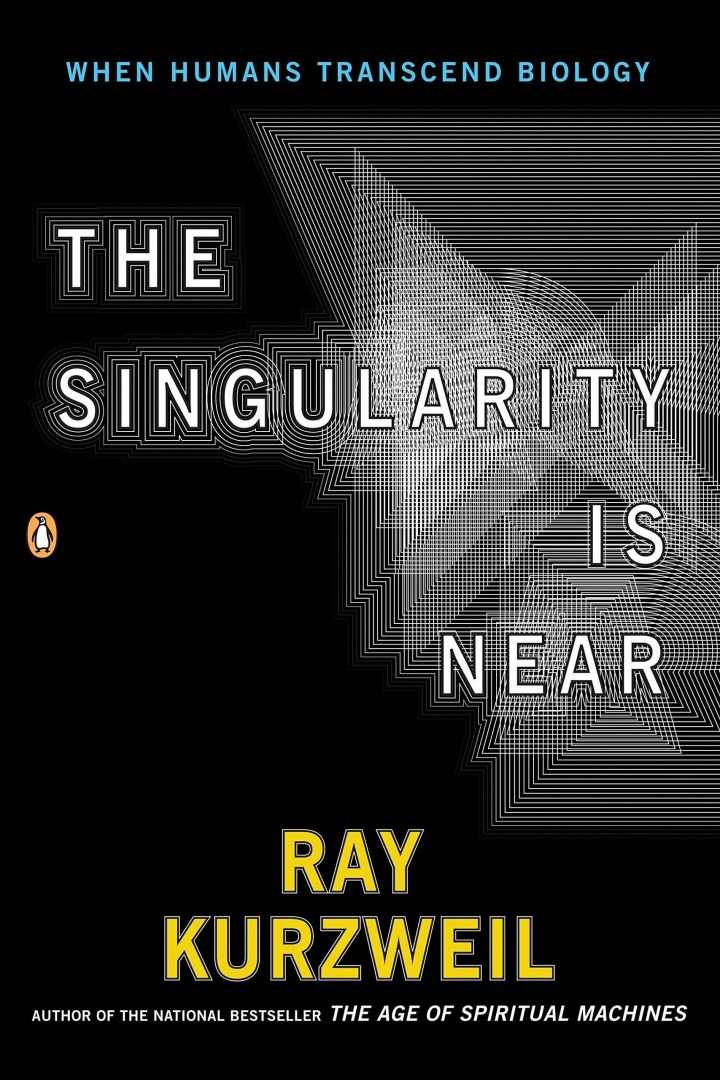

Inventor and futurist Ray Kurzweil wrote about precisely this in his 2005 book "The Singularity Is Near: When Humans Transcend Biology". The book tackles the relationship between artificial intelligence and the future of humanity, and according to Kurzweil, by 2029 AI will have reached the intelligence of an adult human.

We only learn by doing, so it's when AI goes "practical" that we see the problems it brings. Last year, in the aftermath of scandals such as Cambridge Analytica, important issues concerning ethics and AI came to the forefront. People became more aware of the dangers of AI when it is not clearly regulated, and big tech companies were pressured to take a stand on ethics in a number of their projects. A recent Wired article outlines some of those projects.

One example is Project Maven, a Pentagon AI project whose contract renewal led thousands of Google employees to protest. The project uses algorithms to help warfighters identify potential ISIS targets in drone video. Google stated that its technology had "nonoffensive" uses, but after more than 4,500 employees protested, the company decided not only against renewing the contract (which ends in 2019, so let's see if that happens) but also to write a clear set of principles governing its use of AI.

According to Google, its guidelines clearly state that the company will not create systems that reinforce societal biases on gender, race, or sexual orientation, nor AI systems for use in weapons or "other technologies whose principal purpose or implementation is to cause or directly facilitate injury to people".

Whether these principles will be followed remains to be seen. It is also important to realise that Google is able to venture into machine learning and complex AI because of the billions of data points it is constantly gathering… from all of us… its users. That data is privately owned by the company, which is questionable and raises another ethical question in itself.

Google is not alone: Facebook is also becoming more aware of the ethical implications of its work with AI. Joaquin Candela, Facebook's director of applied machine learning, recently said, speaking about some AI applications: "I started to become very conscious about our potential blind spots."

Facebook recently created a group to work on making AI technology ethical and fair. One of its projects is a tool called Fairness Flow, which helps engineers check how their code performs for different demographic groups, such as men and women.
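How Fairness Flow works internally is not described here, so what follows is only a minimal sketch of the general idea behind a per-group check, not Facebook's tool or its API. It assumes a binary classifier, and the function `per_group_metrics` and the toy data are hypothetical: the function computes accuracy and true-positive rate separately for each demographic group, so that a performance gap between groups becomes visible.

```python
from collections import defaultdict


def per_group_metrics(y_true, y_pred, groups):
    """Hypothetical helper (not Fairness Flow's API): accuracy and
    true-positive rate (TPR) of a binary classifier, computed per group."""
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "tp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(truth == pred)
        if truth == 1:  # only actual positives count towards the TPR
            s["pos"] += 1
            s["tp"] += int(pred == 1)
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            # TPR is undefined when a group has no actual positives
            "tpr": s["tp"] / s["pos"] if s["pos"] else None,
        }
        for group, s in stats.items()
    }


# Toy, made-up data for illustration only
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 0, 1]
groups = ["men", "men", "men", "women", "women", "women"]

report = per_group_metrics(y_true, y_pred, groups)
for group, metrics in report.items():
    print(group, metrics)
```

On this toy data the classifier finds only half of the actual positives for "men" but all of them for "women", which is the kind of disparity a per-group check is meant to surface before a model is deployed.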

Facial recognition is another area of AI where many concerns have been raised and all kinds of biases discovered, particularly in how it performs on darker-skinned faces. As a result, it is an area where tech companies have really focused on setting boundaries.

To better deal with the huge problems AI brings to the world, tech companies founded an industry consortium, the Partnership on AI, which aims to work on the ethics and societal impact of the technology. The consortium brings together companies, academics, scholars and researchers.

Even if this is a good and important step, we have to be aware that in big companies the wish to secure contracts often outweighs ethical concerns. Recently, Microsoft announced a contract with US Immigration and Customs Enforcement (ICE) aimed at helping the agency deploy AI and facial recognition. Microsoft's employees protested, but the project moved forward.

As AI evolves at a rapid pace, even more ethical questions are expected to arise in 2019. It is up to all of us to be aware of what is going on and to take conscious action, so that AI works in a way that is ethical and fair.

Maria Fonseca is the Editor and Infographic Artist for IntelligentHQ. She is also a thought leader writing about social innovation, the sharing economy, social business, and the commons. Aside from her work for IntelligentHQ, Maria Fonseca is a visual artist and filmmaker who has exhibited widely at international events such as Manifesta 5, the Sao Paulo Biennial, Photo Espana, Moderna Museet in Stockholm, Joshibi University and many others. She completed her PhD on essayistic filmmaking at the University of Westminster in London and is preparing a postdoc that will explore the links between creativity and the sharing economy.