- The world’s first comprehensive AI law, the EU AI Act, focuses primarily on strengthening rules around data quality, transparency, human oversight and accountability while addressing the ethical questions and implementation challenges in various sectors.

- A team at IBM under the leadership of Armand Ruiz, Director of AI, develops a GPT tool to assist in evaluating projects for compliance with the EU AI Act.

The European Commission proposed the first regulatory framework for AI within the EU in April 2021. As part of its digital strategy, the European Union (EU) set out to regulate artificial intelligence (AI) to improve the conditions for developing and using the technology. When the legislation was first proposed in 2021, the EU Commissioner for Internal Market, Thierry Breton, said in a statement:

“[AI] has been around for decades but has reached new capacities fuelled by computing power.”

Armand Ruiz, the Director of AI at IBM, has built a GPT-based tool designed to analyse projects for compliance with the EU AI Act. The tool gives businesses a structured way to assess whether their AI initiatives align with the requirements of the regulatory framework.

The EU AI Act: Mitigating risks while promoting ethical use

The multifaceted potential benefits of artificial intelligence (AI) span a wide spectrum, encompassing improvements in healthcare, advancements in transportation safety, enhanced efficiency in manufacturing processes, and the development of cost-effective, sustainable energy solutions.

However, alongside these promising advantages, AI also introduces a myriad of threats that reverberate across various sectors of business and commerce. These threats underscore the importance of carefully navigating the deployment and regulation of AI technologies to mitigate risks and ensure responsible and ethical usage in a rapidly evolving technological landscape.

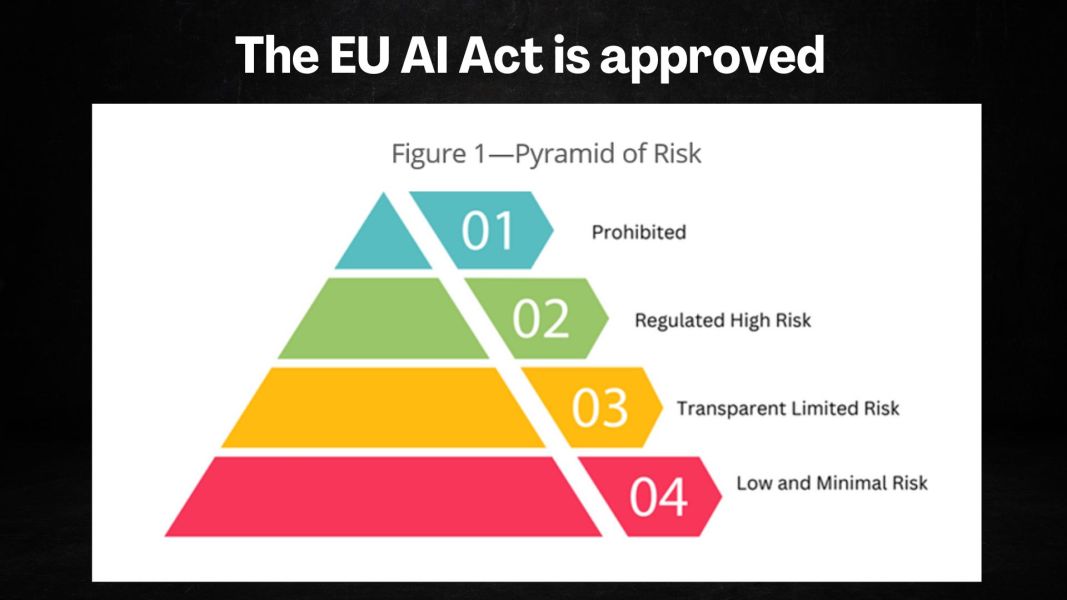

The Artificial Intelligence Act is a legal framework that aims to significantly bolster regulations on the development and use of artificial intelligence. The framework involves a meticulous analysis and classification of AI systems that can be utilised across various applications, based on the level of risk they pose to users. The degree of risk, the commission emphasised, would determine the extent of regulatory measures applied.

The EU AI Act: Prohibitions

The EU AI Act expressly forbids the development, deployment, and use of certain AI systems in order to safeguard fundamental rights. Among the prohibited applications are social credit scoring systems, meaning algorithms that assign numerical scores based on individuals’ social behavior, a practice associated with privacy concerns and potential societal manipulation. The EU AI Act also restricts the real-time biometric identification of individuals in public spaces, except under limited, pre-authorized situations. This limitation aims to curb intrusive surveillance, protecting individuals from unwarranted scrutiny in public spaces.

The EU AI Act: High-risk AI systems

High-risk AI systems, as defined by the EU AI Act, encompass applications that carry a substantial risk to fundamental rights and safety. This classification is extensive and includes AI systems deployed in critical sectors, such as medical devices, vehicles, recruitment and human resources management, education and vocational training, electoral processes, access to various services (e.g., insurance, banking, credit, benefits), and the management of critical infrastructure.

The categorisation is strategic, focusing on domains where the potential consequences of AI malfunction or misuse could have severe societal impacts.
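The risk-based classification described above can be sketched as a simple lookup. This is a minimal illustration, not a legal classification: the tier names, the shorthand labels and the `classify` function are hypothetical, and the two sets contain only the uses and sectors named in this article. Anything the sketch does not recognise is flagged for review rather than assumed to be safe.

```python
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    NEEDS_REVIEW = "needs review"  # anything this sketch does not recognise


# Labels are hypothetical shorthand for the uses named in the article.
# Simplification: real-time biometric identification is treated as prohibited
# here, although the Act allows limited, pre-authorised exceptions.
PROHIBITED_USES = frozenset({
    "social credit scoring",
    "real-time biometric identification in public spaces",
})

HIGH_RISK_AREAS = frozenset({
    "medical devices",
    "vehicles",
    "recruitment and human resources management",
    "education and vocational training",
    "electoral processes",
    "access to services",
    "critical infrastructure",
})


def classify(use_case: str) -> RiskTier:
    """Map a use-case label to a tier; matching is exact and case-insensitive."""
    key = use_case.strip().lower()
    if key in PROHIBITED_USES:
        return RiskTier.PROHIBITED
    if key in HIGH_RISK_AREAS:
        return RiskTier.HIGH_RISK
    return RiskTier.NEEDS_REVIEW
```

Checking the prohibited set first reflects the order in which the article presents the tiers: a prohibited use is never downgraded to high-risk.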

Providers and users of high-risk AI systems are subject to a comprehensive set of requirements under the EU AI Act. First, they must conduct a fundamental rights impact assessment and a conformity assessment, evaluating the potential implications of the AI system for individuals’ rights and ensuring compliance with established norms.

The Act necessitates the registration of high-risk AI systems in a public EU database, fostering transparency and accessibility of information. Providers and users must implement robust risk management and quality management systems to mitigate potential risks, coupled with data governance measures like bias mitigation and representative training data to address ethical considerations.

The EU AI Act imposes specific transparency requirements on high-risk AI systems, demanding clear instructions for use and comprehensive technical documentation. The incorporation of human oversight mechanisms, such as explainability, auditable logs, and human-in-the-loop functionalities, is crucial to ensure accountability and ethical use.

Accuracy, robustness, and cybersecurity are pivotal, with testing and monitoring stipulations in place to guarantee the reliability and security of these high-risk AI systems throughout their lifecycle. These stringent requirements aim to establish a framework that prioritises ethical considerations, safeguards fundamental rights, and minimises the risks associated with deploying high-risk AI technologies within the European Union.
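As a rough illustration, the high-risk requirements listed in this section can be turned into a gap check. The requirement labels are paraphrased from the article, and the `missing_requirements` helper is hypothetical; it is a sketch of how a team might track open items, not an official checklist.

```python
from typing import Iterable, List

# Requirement labels paraphrased from the article (not official wording).
HIGH_RISK_REQUIREMENTS = (
    "fundamental rights impact assessment",
    "conformity assessment",
    "registration in the public EU database",
    "risk management system",
    "quality management system",
    "data governance",
    "instructions for use",
    "technical documentation",
    "human oversight",
    "accuracy, robustness and cybersecurity testing",
)


def missing_requirements(completed: Iterable[str]) -> List[str]:
    """Return the requirements not yet marked as completed, in list order."""
    done = {item.strip().lower() for item in completed}
    return [req for req in HIGH_RISK_REQUIREMENTS if req.lower() not in done]
```

Calling `missing_requirements(["conformity assessment"])` returns the other nine items, which gives a quick view of the work still outstanding for a given system.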

The EU AI Act: GPAI Systems

General Purpose AI (GPAI) systems, as outlined by the EU AI Act, are versatile AI applications designed for diverse tasks without requiring extensive retraining. The Act introduces specific requirements tailored for GPAI systems, emphasising transparency and addressing potential systemic risks associated with high-impact models.

Transparency requirements encompass various aspects, including the provision of comprehensive technical documentation, disclosure of training data, clarification of limitations, adherence to copyright regulations, and the implementation of safeguards.

The EU AI Act imposes supplementary obligations for high-impact models within the GPAI category. These include rigorous model evaluations, systematic risk assessments, adversarial testing to identify vulnerabilities, and incident reporting mechanisms to promptly address and rectify any unforeseen issues.
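The two-layer structure for GPAI systems, with baseline transparency duties for all models and extra obligations for high-impact ones, can be sketched as follows. The labels are paraphrased from the article, and the `gpai_obligations` function and its `high_impact` flag are hypothetical; how a model comes to count as high-impact is outside the scope of this sketch.

```python
from typing import Tuple

# Baseline transparency duties for every GPAI system, as listed in the article.
BASE_TRANSPARENCY = (
    "technical documentation",
    "disclosure of training data",
    "clarification of limitations",
    "adherence to copyright regulations",
    "implementation of safeguards",
)

# Supplementary duties that apply only to high-impact models.
HIGH_IMPACT_EXTRA = (
    "model evaluations",
    "systematic risk assessments",
    "adversarial testing",
    "incident reporting",
)


def gpai_obligations(high_impact: bool) -> Tuple[str, ...]:
    """Return the baseline duties, plus the extras when the model is high-impact."""
    if high_impact:
        return BASE_TRANSPARENCY + HIGH_IMPACT_EXTRA
    return BASE_TRANSPARENCY
```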

The penalties and enforcement mechanisms in the EU AI Act

The EU AI Act sets out robust compliance measures. For violations involving prohibited AI applications, entities may face fines of up to 7% of their global annual turnover or €35 million, whichever is higher. For other violations, penalties remain substantial, with potential fines of up to 3% of global annual turnover or €15 million, whichever is higher.
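The arithmetic behind these ceilings is easy to work through. The sketch below treats the higher of the two figures as the ceiling; the class, the function name and the turnover values used in the note are hypothetical, and the result is an upper bound rather than a prediction of any actual fine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineCeiling:
    turnover_percent: int  # share of global annual turnover, in percent
    fixed_amount_eur: int  # fixed amount, in euros


# Figures as quoted in the article.
PROHIBITED_AI = FineCeiling(turnover_percent=7, fixed_amount_eur=35_000_000)
OTHER_VIOLATIONS = FineCeiling(turnover_percent=3, fixed_amount_eur=15_000_000)


def max_fine_eur(ceiling: FineCeiling, global_turnover_eur: int) -> int:
    """Return the maximum possible fine in whole euros."""
    # Assumption: the ceiling is the higher of the two figures.
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_based = global_turnover_eur * ceiling.turnover_percent // 100
    return max(turnover_based, ceiling.fixed_amount_eur)
```

For a hypothetical company with €1 billion in global annual turnover, 7% comes to €70 million, which exceeds the €35 million fixed amount. At €200 million in turnover, 7% is only €14 million, so the fixed amount is the larger figure.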

The significant financial repercussions act as a powerful deterrent, encouraging responsible development, deployment, and use of AI systems within the outlined guidelines.

The EU AI Act compliance analysis: A GPT tool developed by IBM

A team of AI developers at IBM, led by Director of AI Armand Ruiz, has introduced a GPT tool designed to streamline the compliance assessment process for projects that fall under the EU AI Act.

This innovative tool represents a pivotal advancement in the field, offering businesses a comprehensive solution for evaluating the adherence of their AI initiatives to the regulatory mandates outlined in the EU AI Act.

Expressing his opinion on the EU AI Act, Ruiz says:

“The EU AI Act appears less stringent than anticipated. The law imposes some limits on foundation models but offers significant leeway to “open-source models” — those built with publicly modifiable code. This could advantage open-source AI firms in Europe that opposed the legislation, such as France’s Mistral and Germany’s Aleph Alpha, and even Meta, which introduced the open-source model LLaMA.”

Leveraging the capabilities of GPT technology, the tool is engineered to provide businesses with detailed insights and guidance, facilitating a thorough examination of projects to ensure they align seamlessly with the stringent regulatory framework.

Hernaldo Turrillo is a writer and author specialised in innovation, AI, DLT, SMEs, trading, investing and new trends in technology and business. He has been working for ztudium group since 2017. He is the editor of openbusinesscouncil.org, tradersdna.com and hedgethink.com, and writes regularly for intelligenthq.com and socialmediacouncil.eu. Hernaldo was born in Spain and settled in London, United Kingdom, after a few years of personal growth. He completed his bachelor's degree in Journalism at the University of Seville, Spain, and began working as a reporter at the newspaper Europa Sur, writing about politics and society. He also worked as a community manager and marketing advisor in Los Barrios, Spain. Innovation, technology, politics and the economy are his main interests, with a special focus on new trends and ethical projects. He enjoys getting lost in words, explaining what he understands of the world and helping others. Besides being a journalist, he is also a thinker and is proactive in digital transformation strategies. Knowledge and ideas have no limits.