While the capabilities of artificial intelligence deserve emphasis, it is crucial to acknowledge its dual nature. AI not only enhances many aspects of our lives but can also be exploited as a tool by malicious actors.

The rapidly evolving threat landscape associated with AI is a cause for concern. Between January and February 2023, researchers at Darktrace observed a 135% increase in "novel social engineering" attacks, coinciding with the widespread adoption of ChatGPT.

Defenses against the malicious use of AI in cyberattacks urgently need to be strengthened. Adversaries can now use AI to carry out highly targeted attacks, and because AI-driven attacks change so quickly, traditional static defense mechanisms often fail to keep up.

Conventional cybersecurity measures such as signature-based antivirus software, firewalls, and rule-based intrusion detection systems struggle to stay ahead of these threats. More adaptive and advanced cybersecurity strategies are therefore needed.

As AI systems become more autonomous and sophisticated, the threat landscape is changing significantly, which underscores the need to act now to prevent disruption.

The dark side of AI: Common AI scams

Recent revelations shed light on three common AI scams that individuals should remain vigilant against.

Cloning the voices of loved ones is a distressing trend that has contributed to a surge in fraud-related losses. In 2022 alone, Americans lost nearly $9 billion to fraud, an increase of more than 150% over just two years, according to the Federal Trade Commission. In one particularly harrowing incident, scammers used a computer-generated voice to impersonate a woman's 15-year-old daughter.

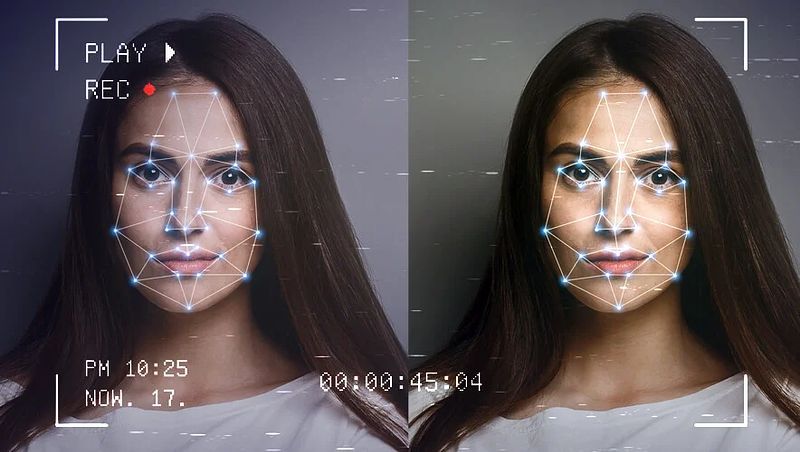

"Deepfake" photos and videos use AI to generate entirely fabricated visual content. This includes fake news videos designed to spread misinformation, as well as content produced for other malicious purposes. In Inner Mongolia, for instance, a scammer used face-swapping technology to impersonate a victim's friend during a video call. Believing the friend needed to pay a deposit for a bidding process, the victim transferred a substantial sum of money to the scammer.

AI-generated emails and texts can appear highly personalised, often mimicking communication from family members, friends, or employers. This poses a significant risk to both personal and corporate security.

Chelsea Alves, a consultant at UNmiss, shares her thoughts on how and why scammers are using AI to deceive consumers:

“Unfortunately deceptive consumer practices, such as scamming, have long haunted businesses and consumers alike. Scammers were quick to notice the power and sophistication that AI offers in creating convincing and personalized fraudulent schemes as well as how well these schemes work. As we navigate this evolving landscape, it’s imperative for consumers to not only be skeptical but also to stay informed about the latest AI-driven scamming techniques.”

How consumers can protect themselves from deceptive schemes

To navigate the risks of AI scams, consumers should develop a basic understanding of how AI is used in deceptive practices. One practical habit is to avoid clicking on links until the sender's email address has been verified. For example, individuals can avoid falling victim to phishing scams by cross-referencing the sender's details against contacts they already know and by treating unexpected or suspicious messages with caution.

Families and businesses can also agree on a unique "safe word" for emergency verification. This precaution allows people to confirm that a communication is authentic in a critical situation. For instance, a family might agree on a specific code word or phrase to use during emergencies, so that an urgent, AI-generated plea for help that cannot supply it is exposed as fake.

Consumers are also advised to be cautious of offers that seem too good to be true, and of messages that seem alarming enough to demand immediate action. Fraudulent schemes that promise incredible deals or exploit people's fears often involve AI-generated content. By treating such messages with skepticism, individuals can avoid falling victim to these deceptive tactics.

The golden rule, however, is that consumers should never divulge personal or financial information in response to unsolicited communications, even if they appear to come from trusted sources. Following this principle reduces the risk of identity theft and financial fraud.

With a driving passion to create relatable content, Pallavi progressed from freelance writer to full-time professional. Science, innovation, technology, and economics are a few (but not the only) fields she is passionate about. Beyond writing content for intelligenthq.com, citiesabc.com, and openbusinesscouncil.org, she also loves reading, writing, and teaching.