The human eye is one of the most remarkable organs in the body. It holds between six and seven million cone cells, which contain colour-sensitive proteins called opsins. These proteins respond differently to different wavelengths of light, triggering a cascade of signals that is transmitted to the brain for interpretation.

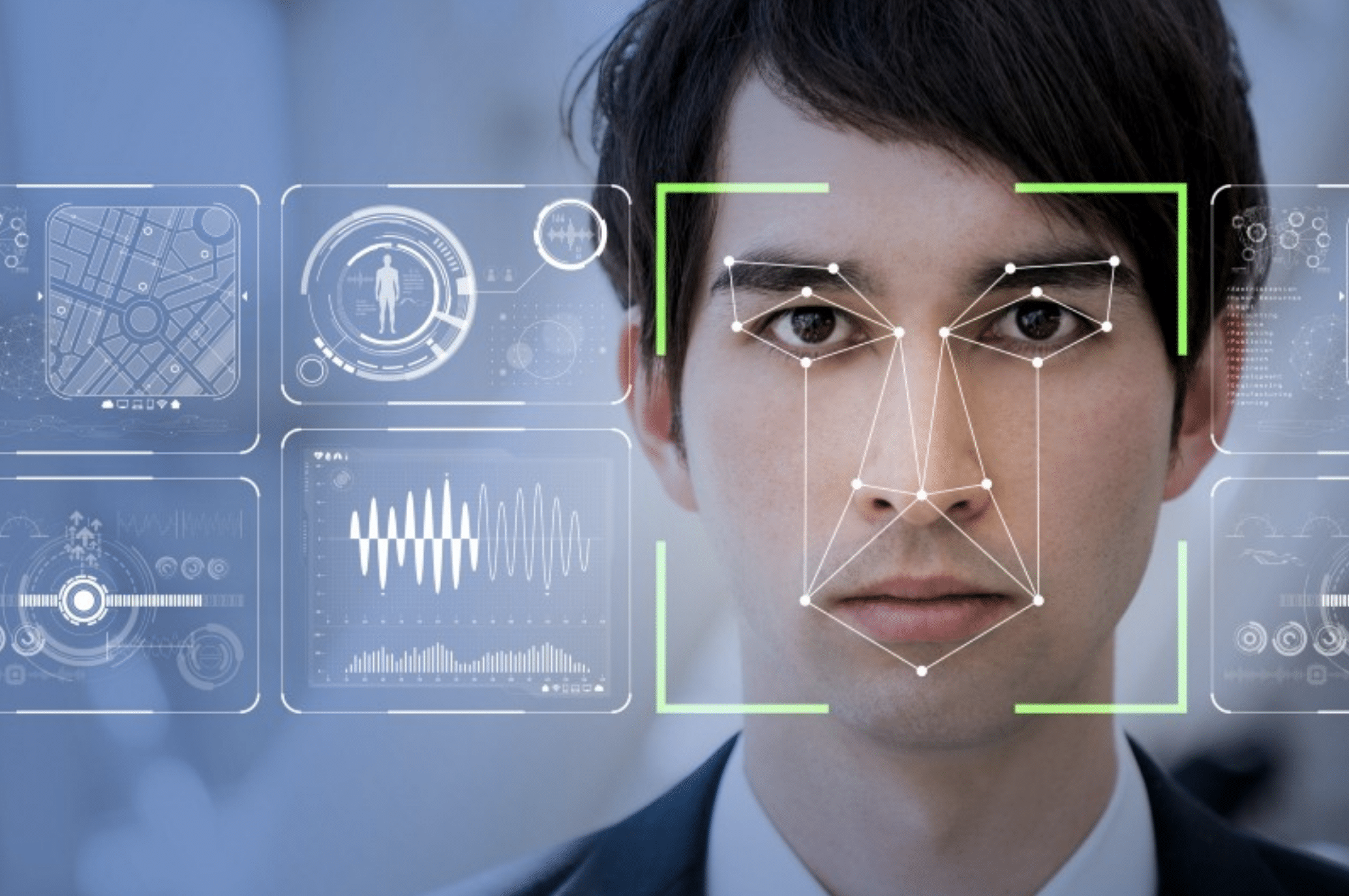

This entire process is highly complex, and emulating it with a machine is even harder. For many years, the human eye has been the main inspiration behind what we call machine vision systems. Broadly, machine vision entails emulating human vision through tasks such as facial recognition, pattern recognition, and reconstructing 3D scenes from 2D images.

What many people don’t know is that OpenCV, including its Java bindings, has contributed immensely to the growth of computer vision.

In this article, we look at the impact of OpenCV on computer vision, the merits and demerits of the library, and tips that can help Java OpenCV engineers improve their work.

What is OpenCV?

Open Source Computer Vision Library (OpenCV) is an open-source machine learning and computer vision software library. It allows developers to build computer vision applications and encourages the use of machine learning in commercial products.

Thanks to its many optimized algorithms, OpenCV has been used with Java in a wide range of tasks, including facial recognition, tracking camera movements, producing high-resolution images, extracting 3D models of objects, and recognizing scenery.

The versatility of OpenCV is admirable. For starters, it offers Java, C++, Python, and MATLAB interfaces, which makes it easy to work with from different languages. Additionally, the library supports Windows, Linux, macOS, and Android, which means that as a Java OpenCV engineer you don’t need to worry about the platform you use.

Blue-chip companies that use the OpenCV library include Google, Yahoo, Microsoft, IBM, Intel, and Sony. OpenCV has also been deployed by governments in stitching street view images and monitoring mining equipment.

Now, let us turn to the idea behind this article: six secrets of a Java OpenCV engineer’s work. We will look at OpenCV from the perspective of a Java engineer and pick up some tips that can make that work easier.

OpenCV and Facial Recognition

Over the last decade, facial recognition has emerged as a major area of focus for researchers. Facial recognition systems use computer algorithms to pick out specific details of a person’s face.

Common details picked out by these systems include the shape of the chin and the distance between the eyes. These details are then converted into a mathematical representation, which is compared with similar data stored in the face recognition database.

As a Java OpenCV engineer looking to build a facial recognition system, you can use the Eigenfaces, Fisherfaces, or LBPH (Local Binary Patterns Histograms) face recognizer to match a detected face against known ones.

Below is an excerpt from a face recognition sample, written with OpenCV’s Python bindings:

    def prediction(test_img):
        # ...
        else:
            # get name of respective label returned by face recognizer
            # ...
            draw_text(test_img, label, rect[0], rect[1]-5, confidence)

    # load test images
    # perform a prediction
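To make the step of turning facial details into numbers more concrete, here is a minimal NumPy sketch of the feature behind the LBPH recognizer: a histogram of local binary pattern (LBP) codes. It is a simplified illustration and not OpenCV’s implementation. It builds one global histogram rather than a grid of per-cell histograms. The function names `lbp_histogram` and `closest_match` and the striped toy "database" are assumptions made for this example.

```python
import numpy as np

def lbp_histogram(gray):
    """Return a normalised 256-bin histogram of basic 3x3 LBP codes."""
    gray = np.asarray(gray, dtype=np.int32)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    codes = np.zeros_like(center)
    # The 8 neighbours, visited clockwise from the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        # Set this bit where the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int32) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

def closest_match(query_hist, database):
    """Return the label whose stored histogram is nearest (Euclidean distance)."""
    return min(database, key=lambda name: np.linalg.norm(database[name] - query_hist))

# Toy "database" of two textures standing in for enrolled faces (assumption).
stripes_h = np.tile(np.array([[0], [200]]), (8, 16))  # 16x16, horizontal stripes
stripes_v = stripes_h.T                               # 16x16, vertical stripes
database = {"horizontal": lbp_histogram(stripes_h),
            "vertical": lbp_histogram(stripes_v)}

# A uniformly brighter copy produces the same LBP codes as the original.
query = lbp_histogram(stripes_h + 40)
print(closest_match(query, database))
```

Because each code depends only on whether a neighbour is brighter than the centre pixel, a uniform change in brightness leaves the histogram unchanged, which is one reason LBP-based features cope reasonably well with lighting differences.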

Resizing of Images Using OpenCV

Most machine learning models expect a fixed-size input, and computer vision models are no exception. This becomes a problem when you build your own datasets, especially when you scrape images of varying sizes from different sources. That’s where OpenCV comes in.

Java OpenCV engineers can easily scale images up and down using OpenCV. For deep learning models, they can resize images to match the model’s input shape using one of the following interpolation flags:

- INTER_NEAREST: nearest-neighbour interpolation.

- INTER_LINEAR: bilinear interpolation.

- INTER_AREA: resampling using pixel area relation.

- INTER_CUBIC: bicubic interpolation.

- INTER_LANCZOS4: Lanczos interpolation over an 8×8 neighbourhood.

If you do not specify an interpolation method, OpenCV’s resize function defaults to bilinear interpolation (INTER_LINEAR).

Changing Color Space

A color space is basically a convention for representing colors in a way that makes them easy to reproduce and recognize. In a grayscale image each pixel is represented by a single value, whereas in a color image each pixel contains three color channels: Red, Green, and Blue.

Most computer vision systems therefore use the RGB format to process images. However, applications such as device-independent storage and video compression depend heavily on other color spaces, such as Hue-Saturation-Value (HSV).

In the RGB color space, color information and intensity are intertwined: every channel carries a mix of both, so a change in lighting alters all three values. In HSV, on the other hand, the two are clearly separated. As such, Java OpenCV engineers often prefer the HSV color space over RGB, as it is more robust to lighting changes.

OpenCV decodes images in BGR channel order by default. As an OpenCV engineer, you can convert an image from BGR to RGB with the cvtColor function and the COLOR_BGR2RGB conversion code.

OpenCV Python Performance Optimization in NumPy and IPython

Image processing involves a large number of operations per second. This means that your code should not only produce the right result but also do so as quickly as possible.

NumPy operations are mostly implemented in C, which avoids the expensive overhead of Python loops. Accessing individual pixels, however, is not a vector operation, so it brings that overhead back. Accordingly, even though NumPy is regarded as one of the best numerical processing libraries, its performance gains are greatly reduced when it is combined with individual element access and Python for loops.

With NumPy, a developer can often boost performance by orders of magnitude compared with standard Python lists.

Along the computer vision journey, however, OpenCV developers also run into algorithms that require these loops to be written by hand.

Over the years, several coding methods and techniques that developers use to boost the performance of NumPy and Python have emerged. But before we look at them, it’s important to mention one thing: get the algorithm working first. Once it works, you can profile it, find the bottlenecks, and then optimize them.

And now to the performance boost methods:

- Double and triple loops are inherently slow. As a developer, avoid them as much as you can.

- Vectorize the code as much as possible, because both OpenCV and NumPy are optimized for vector operations.

- Avoid copying arrays unless you need to, as copies can be costly. Use views instead where possible.

- Take advantage of cache coherence.
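The first three tips can be sketched in a few lines of NumPy. The image inversion task, the function names `invert_loop` and `invert_vectorized`, and the region coordinates are assumptions chosen for illustration.

```python
import numpy as np

def invert_loop(img):
    """Invert an 8-bit image pixel by pixel (slow: Python-level double loop)."""
    out = np.empty_like(img)
    for y in range(img.shape[0]):
        for x in range(img.shape[1]):
            out[y, x] = 255 - img[y, x]
    return out

def invert_vectorized(img):
    """Same result, computed by a single vectorized NumPy expression."""
    return 255 - img

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(120, 160), dtype=np.uint8)

# A slice is a view: it shares memory with `img`, so no pixels are copied.
roi_view = img[10:50, 20:80]

# An explicit copy owns its own memory, which costs time and RAM.
roi_copy = img[10:50, 20:80].copy()

print(np.array_equal(invert_loop(img), invert_vectorized(img)))
print(np.shares_memory(roi_view, img), np.shares_memory(roi_copy, img))
```

Timing the two functions with the standard `timeit` module on a realistically sized image is a simple way to see how large the gap is on your own machine.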

Image Segmentation

Image segmentation is a useful companion to techniques such as template matching in OpenCV. It involves classifying the individual pixels of an image. For instance, pixels can be classified as either foreground or background.

One of the most commonly used segmentation algorithms among CV engineers is the watershed algorithm. It treats the pixel values of an image as a topographic surface. Here is what watershed image segmentation code looks like:

    # importing required libraries
    # reading the image
    dist_transform = cv2.distanceTransform(opening, cv2.DIST_L2, 5)
    sure_fg = np.uint8(sure_fg)
    ret, markers = cv2.connectedComponents(sure_fg)
    markers = markers + 1
    markers[unknown == 255] = 0
    markers = cv2.watershed(image, markers)
    plt.imshow(sure_fg)

Edge Detection

Edge detection is a technique CV engineers use to find boundaries within images, and it also underpins image sharpening. It works by detecting discontinuities in brightness, which often correspond to changes in depth, surface orientation, or other properties of the scene in an image or video.

Edges are useful features, as they can be used to classify different objects within an image. Even deep learning models compute edge features in order to extract information about the objects in an image.

It’s also important to mention that edges are not the same as contours. Edges are not tied to the objects within an image; they simply express variations in pixel values.

Advantages of OpenCV

- It is open source, which makes OpenCV free to use and easy to install.

- Fast, thanks to its C/C++ implementation.

- Low RAM usage.

- Portable, meaning it runs on a wide range of devices and operating systems.

Demerits of OpenCV

- Compared to MATLAB, OpenCV is not as easy to use.

- OpenCV ships with its own copy of the FLANN library. As a result, engineers can run into conflicts when using OpenCV together with the PCL library.

Java OpenCV Engineer Skill Set and Experiences

A Java OpenCV developer or engineer needs a multi-faceted skill set spanning robotics, machine learning, and control systems to be able to tackle product development challenges. They must also be good at linear algebra, mathematics, and statistics, which are key ingredients of pattern recognition and image processing. Other supplementary skills include C/C++ for testing purposes and a grounding in geometry for computer vision applications.

Proficiency in video analytics algorithms, 3D computer vision and reconstruction, tracking and classification, and object and motion detection is an added advantage.

Bottom Line

Alongside other emerging technologies like AI, computer vision has received massive support from the developing world in recent years. Vivid demonstrations of this technology, such as Google’s deep learning “paintings” and apps such as FaceApp, continue to make headlines in both mainstream and non-traditional media.

To the average Joe, OpenCV may sound like little more than a source of amusement. However, this library has demonstrated that, with the availability of other supporting technologies and relatively cheap processing power, the CV approach can be scaled widely.

This is an article provided by our partners’ network. It does not reflect the views or opinions of our editorial team and management.

Contributed content

Author Bio

Anastasia Stefanuk is a passionate writer and Information Technology enthusiast. She works as a Content Manager at Mobilunity, a provider of dedicated development teams around the globe. Anastasia keeps abreast of the latest news in all areas of technology, Agile project management, and software product growth hacking, at the same time sharing her experience online to help tech startups and companies to be up-to-date.
