In these times of non-stop innovation, it is getting harder to be amazed by the new technologies that are yet to come. In fact, most upcoming technologies are based on AI and on the different ways it will be applied in real-life scenarios in the short term.

It almost seems trivial now whether we are about to change the way life is created, build whole new smart cities from the ground up, or even 3-D print metal objects in the comfort of our own living rooms. Maybe we are getting too used to living in this sci-fi-like world, but the truth is that these technologies, along with others we will discuss later, are about to change our world in 2018, and some are already changing it.

Above all, artificial intelligence seems to be the favourite of most of the giant technology companies. It is seen as a possible answer to the privacy problems that have hit the sector hard lately, and as the way forward for understanding users' habits in depth and personalising their experience. For companies such as Microsoft or Google, it is like killing two birds with one stone, and according to a recent MIT Technology Review article, this year they are set to put AI to work in a whole new variety of ways.

Main Technology Breakthroughs

1. Building up the sensing city

Numerous smart-city schemes have run into delays, dialed down their ambitious goals, or priced out everyone except the super-wealthy. A new project in Toronto, called Quayside, is hoping to change that pattern of failures by rethinking an urban neighbourhood from the ground up and rebuilding it around the latest digital technologies.

As the MIT article explains, one of the project's goals is to base decisions about design, policy, and technology on information from an extensive network of sensors that gather data on everything from air quality to noise levels to people's activities.

The plan calls for all vehicles to be autonomous and shared. Robots will roam underground doing menial chores like delivering the mail. The project will also open access to the software and systems it creates, so that other companies can build services on top of them, much as people build apps for mobile phones.

2. Taking AI to the Cloud

One of the biggest drawbacks of implementing AI algorithms in apps, websites and platforms is their cost. In the tech sector, AI is seen as a luxury that only specialised companies and big teams can fully implement. However, that is about to change.

Machine-learning tools based in the cloud are bringing AI to a far broader audience. According to MIT, Amazon currently dominates cloud AI through its AWS subsidiary. Google is challenging it with TensorFlow, an open-source AI library that can be used to build other machine-learning software. Google also recently announced Cloud AutoML, a suite of pre-trained systems that could make AI simpler to use.

Microsoft, which has its own AI-powered cloud platform, Azure, is teaming up with Amazon to offer Gluon, an open-source deep-learning library. Gluon is meant to make building neural nets (a key AI technology that crudely mimics how the human brain learns) as easy as building a smartphone app.

It is still uncertain which of these companies will lead the market for AI cloud services, but it will be a huge business opportunity for the winners.

3. Dueling Neural Networks

Artificial intelligence is getting very good at identifying things: show it a million pictures, and it can tell you with uncanny accuracy which ones depict a pedestrian crossing a street. But AI is hopeless at generating images of pedestrians by itself. If it could do that, it would be able to create gobs of realistic but synthetic pictures depicting pedestrians in various settings, which a self-driving car could use to train itself without ever going out on the road.

The problem is that creating something entirely new requires imagination, and until now that has perplexed AIs.

However, an approach first developed in Montreal in 2014 points the way forward. Known as a generative adversarial network (GAN), it takes two neural networks (the simplified mathematical models of the human brain that underpin most modern machine learning) and pits them against each other in a digital cat-and-mouse game. Both networks are trained on the same data set. One, known as the generator, is tasked with creating variations on images it has already seen, perhaps a picture of a pedestrian with an extra arm. The second, known as the discriminator, is asked to identify whether the example it sees is like the images it has been trained on or a fake produced by the generator. As the contest goes on, the generator's fakes become harder and harder for the discriminator to tell apart from the real images.
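To make the cat-and-mouse game concrete, the sketch below shrinks it to a single dimension using only the Python standard library. It is a hypothetical toy, not the Montreal team's code: the "real images" are assumed to be plain numbers drawn from a bell curve centred on 2.0, the generator `G(z) = z + b` can only shift random noise by a learned offset `b`, and the discriminator `D(x) = sigmoid(w*x + c)` is a one-neuron logistic classifier. The function and parameter names are made up for illustration. The two players take turns: the discriminator nudges `w` and `c` to score real samples higher than fakes, then the generator nudges `b` so that its fakes score as more real.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function, maps any real t into [0, 1]."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(real_mean: float = 2.0, steps: int = 2000,
                  batch: int = 64, lr: float = 0.1, seed: int = 0) -> float:
    """Hypothetical 1-D GAN: returns the generator's learned offset b.

    Assumptions (toy setup, not from the article): real data ~ N(real_mean, 1),
    generator G(z) = z + b with z ~ N(0, 1), discriminator D(x) = sigmoid(w*x + c).
    """
    rng = random.Random(seed)
    b = -2.0          # generator starts far away from the real data
    w, c = 0.0, 0.0   # discriminator starts undecided: D(x) = 0.5 everywhere

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator turn: gradient ascent on
        # mean(log D(real)) + mean(log(1 - D(fake))).
        s_real = [sigmoid(w * x + c) for x in real]
        s_fake = [sigmoid(w * x + c) for x in fake]
        grad_w = (sum((1.0 - s) * x for s, x in zip(s_real, real))
                  - sum(s * x for s, x in zip(s_fake, fake))) / batch
        grad_c = (sum(1.0 - s for s in s_real) - sum(s_fake)) / batch
        w += lr * grad_w
        c += lr * grad_c

        # Generator turn: gradient descent on -mean(log D(G(z))), which pushes
        # fresh fakes toward the region the discriminator now labels "real".
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        grad_b = -sum((1.0 - sigmoid(w * (zi + b) + c)) * w for zi in z) / batch
        b -= lr * grad_b

    return b


if __name__ == "__main__":
    print(f"learned offset b = {train_toy_gan():.3f} (real mean is 2.0)")
```

Calling `train_toy_gan()` should return an offset somewhere near 2.0: the generator ends up imitating the real data even though it only ever learns from the discriminator's verdicts. Practical GANs swap these three numbers for deep networks over millions of pixels, but the alternating update loop follows the same pattern.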

Bonus Technology

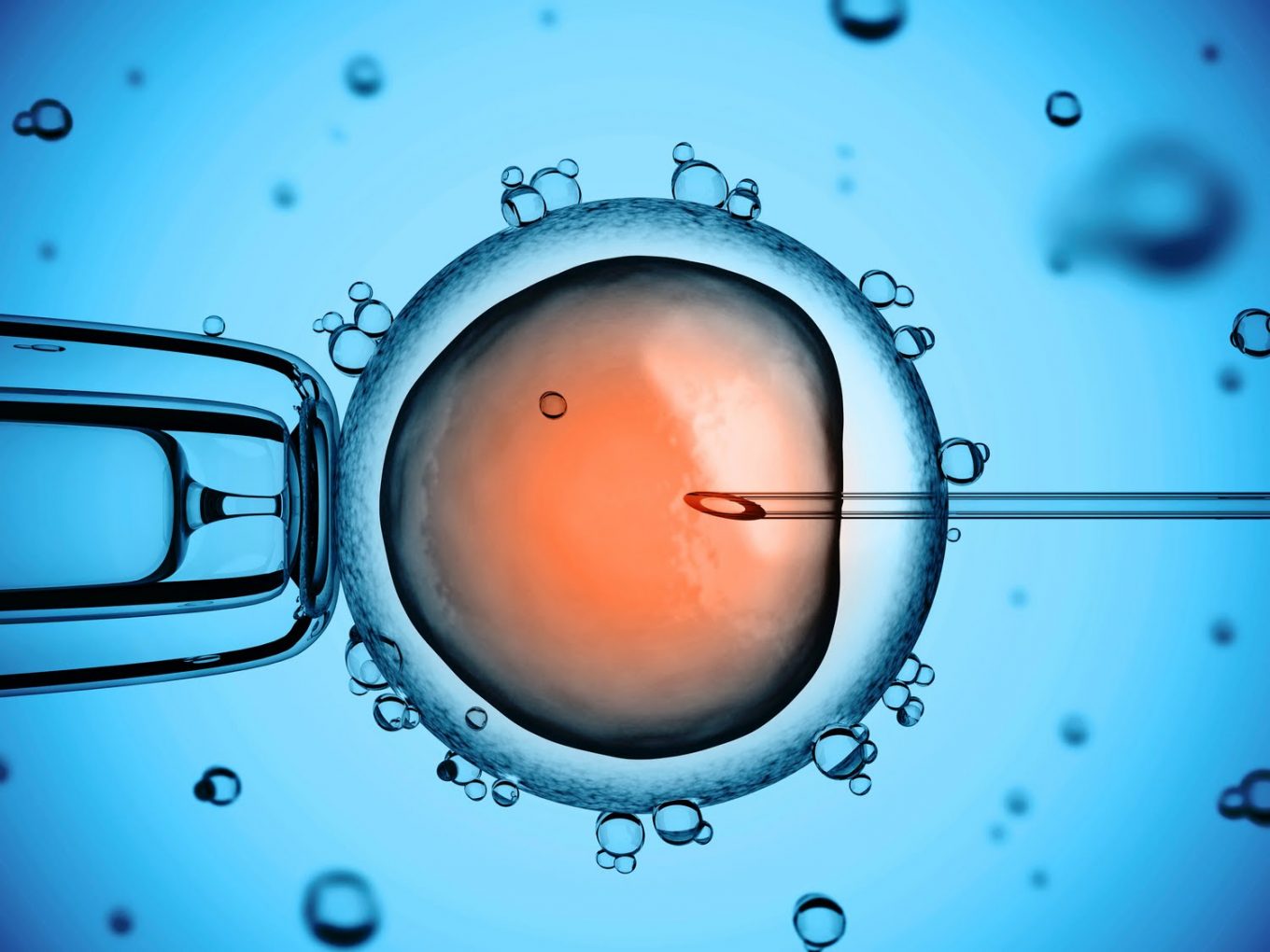

Artificial Embryos

In a breakthrough that redefines how life can be created, embryologists working at the University of Cambridge in the UK have grown realistic-looking mouse embryos using only stem cells. No egg. No sperm. Just cells plucked from another embryo.

The researchers placed the cells carefully in a three-dimensional scaffold and watched, fascinated, as they started communicating and lining up into the distinctive bullet shape of a mouse embryo several days old.

Synthetic human embryos would be a boon to scientists, letting them tease apart events early in development. And since such embryos start with easily manipulated stem cells, labs will be able to employ a full range of tools, such as gene editing, to investigate them as they grow.

Artificial embryos, however, pose ethical questions. What if they turn out to be indistinguishable from real embryos? How long can they be grown in the lab before they feel pain? We need to address those questions before the science races ahead much further, bioethicists say.

Hernaldo Turrillo is a writer and author specialised in innovation, AI, DLT, SMEs, trading, investing and new trends in technology and business. He has been working for ztudium group since 2017. He is the editor of openbusinesscouncil.org, tradersdna.com and hedgethink.com, and writes regularly for intelligenthq.com and socialmediacouncil.eu. Hernaldo was born in Spain and, after a few years of personal growth, settled in London, United Kingdom. He earned his bachelor's degree in Journalism at the University of Seville, Spain, and began working as a reporter at the newspaper Europa Sur, writing about politics and society. He also worked as a community manager and marketing advisor in Los Barrios, Spain. Innovation, technology, politics and the economy are his main interests, with a special focus on new trends and ethical projects. He enjoys getting lost in words, explaining what he understands about the world and helping others. Besides being a journalist, he is a thinker and is proactive in digital transformation strategies. Knowledge and ideas have no limits.