As artificial intelligence development marches on unabated, there is a fear that we may soon face a technological singularity. The technological singularity refers to the idea of machines becoming ever more advanced and gaining superintelligence, ultimately leading to technological growth that is unstoppable and independent of human action, resulting in crises and unforeseen changes to human societies.

Ultimately, what is at stake here is the prospect of machines that are more intelligent than humans.

Narrow AI and General AI (AGI)

Experts who have analysed what AI can and cannot truly do say that the singularity scenario is not as far-fetched as it seems, particularly once one distinguishes between categories of AI. The first is what they call “narrow AI”: systems that can surpass human capabilities, but only in specific areas such as playing games or generating images. In other areas, like translation, reading comprehension or driving, machines cannot yet surpass humans, though some think they are getting closer. What frightens most people is how and when narrow AI will give way to “general AI”, or AGI: systems with human-level problem-solving abilities across many different domains. That fear, however, assumes that we look at machines as separate from humans.

There is no general agreement on whether the technological singularity will happen or, if it ever does, when.

Steven Pinker, a famous Harvard professor and author, for example, feels that it will never happen. In his essay in the recent book “Possible Minds”, he writes peremptorily that “These scenarios are self-refuting,” arguing that they stem from an assumption that an AI is “so imbecilic that it would wreak havoc based on elementary blunders of misunderstanding.”

A far more positive view of the technological singularity comes from Raymond Kurzweil, the widely known American author, computer scientist and renowned futurist, who is also a Director of Engineering at Google. His outlook is quite optimistic. He writes:

“By the time we get to the 2040s, we’ll be able to multiply human intelligence a billionfold. That will be a profound change that’s singular in nature. Computers are going to keep getting smaller and smaller. Ultimately, they will go inside our bodies and brains and make us healthier, make us smarter.”

Kurzweil has been writing about and researching the technological singularity since 1999, when he anticipated that the computational capacity of computers would increase exponentially.

Kurzweil subscribes to the ideas of transhumanism, a philosophical movement that believes in and investigates the potential for the human race to evolve beyond its current physical and mental limitations, especially by means of science and technology. His research led him to explore and write about quite imaginative futuristic scenarios, such as nanotechnology augmenting our bodies and helping us achieve immortality by eliminating terminal diseases, even if that means humans connecting to computers via direct neural interfaces or living full-time in virtual reality. Kurzweil also wrote that machines “will appear to have their own free will” and even have “spiritual experiences”. This, again, makes us ponder where the boundary between machine and human lies.

Kurzweil’s predictions over the past 20 years have often been spot on, as he seems able to extrapolate technology trends and give readers well-articulated explanations. However, there is disagreement on whether computers will one day be conscious, and this question sits at the heart of the current debate around the technological singularity.

Among his opponents are the philosophers John Searle and Colin McGinn, neither of whom believes that AI on its own will evolve into a fully conscious machine. Searle, for example, bases his argument on the Chinese room thought experiment: his point is that computers can only manipulate symbols that are meaningless to them.

A new type of humans?

Kurzweil’s positive view of the technological singularity led him to transhumanist ideas (transhumanism is abbreviated as H+ or h+). Transhumanist thinkers study the potential benefits and dangers of emerging technologies that could overcome fundamental human limitations, as well as the limitations of using such technologies. The most common transhumanist thesis is that human beings may eventually be able to transform themselves into different beings with abilities greatly expanded beyond the current human condition.

In one sense, this is hardly news. After all, we have come a long way from Australopithecus to modern humans, thanks in part to the technological inventions we made along the way, which in turn reshaped us. What is interesting and novel now is that we have the consciousness to regulate those changes and that evolution. Transhumanist views are also more aware that AI is not separate from humans, but results from data exchanged with humans.

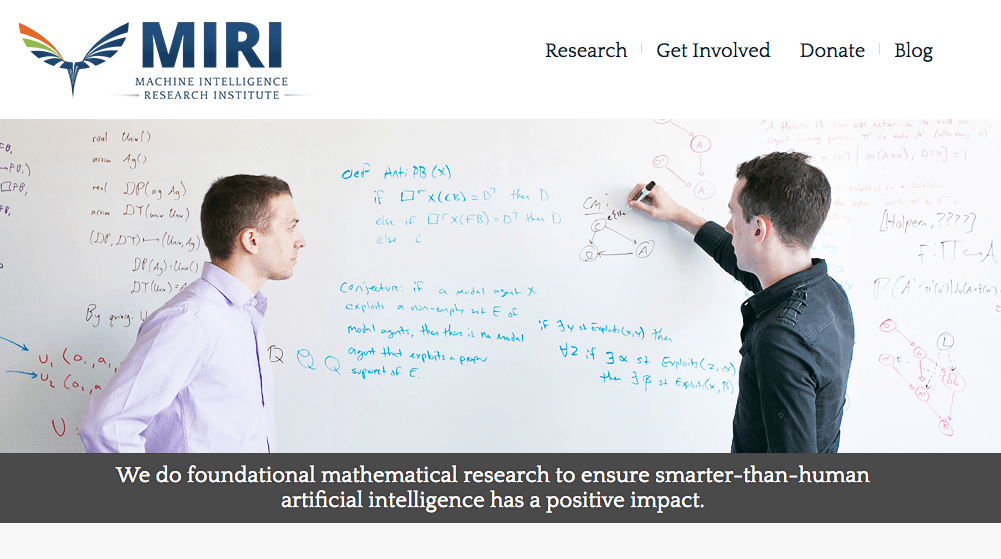

One of the key institutions exploring transhumanism is the Machine Intelligence Research Institute (MIRI), formerly the Singularity Institute for Artificial Intelligence (SIAI), a non-profit organization founded in 2000 by Eliezer Yudkowsky. MIRI’s work has lately focused on a friendly AI approach to system design and on predicting the rate of technology development and its risks, moving cautiously away from full-on optimism about AI towards the notion that disruptive technological development needs to happen for the benefit of humans (and other species), and not the other way around.

Challenges of Technological Singularity

Other views look at the singularity far more gloomily. Stephen Hawking warned that it could bring an end to the human race, and others fearfully warn that artificial general intelligence (AGI) could be developed within two to three decades. In this view, AGI would then be able to improve itself continually, leading to an artificial superintelligence that would keep expanding, since the limits to this process are unknown. That would bring us to the technological singularity described earlier.

While not everyone thinks the technological singularity will ever happen, particularly in those terms (humans versus machines, with machines seen as separate from humans), the prospect naturally raises questions about where we are going as a human race.


Dinis Guarda is an author, academic, influencer, serial entrepreneur, and leader in 4IR, AI, Fintech, digital transformation, and Blockchain. Dinis has created various companies, such as the Ztudium tech platform; the global digital platform directory openbusinesscouncil.org; citiesabc.com, a digital transformation platform to empower, guide and index cities; and the fashion technology platform fashionabc.org. He is also the publisher of intelligenthq.com, hedgethink.com and tradersdna.com. He has worked with the likes of the UN/UNITAR, UNESCO, the European Space Agency, the WEF in Davos, Philips, Saxo Bank, Mastercard, Barclays, and governments all over the world.

With over two decades of experience in international business, C-level positions, and digital transformation, Dinis has worked with new technologies and cryptocurrencies, driven ICOs, regulation, compliance and international legal processes, created a bank, and been involved in the inception of some of the top 100 digital currencies.

He creates and helps build ventures focused on global growth, 360 digital strategies, sustainable innovation, Blockchain, Fintech, AI and new emerging business models such as ICOs / tokenomics.

Dinis is the founder and CEO of ztudium, which manages blocksdna and lifesdna. These products and platforms offer multiple AI, P2P, fintech, blockchain, search engine and PaaS solutions in consumer wellness, healthcare and lifestyle, working with a global team of experts and universities.

He is the founder of coinsdna, a new Swiss-based, Swiss-regulated, institutional-grade token and cryptocurrency blockchain exchange. He is also the founder of DragonBloc, a blockchain, AI and fintech fund, and co-founder of the Freedomee project.

Dinis is the author of various books, including “4IR AI Blockchain Fintech IoT Reinventing a Nation”, “How Businesses and Governments can Prosper with Fintech, Blockchain and AI?”, the larger case study and book (400 pages) “Blockchain, AI and Crypto Economics – The Next Tsunami?”, and “Tokenomics and ICOs – How to be good at the new digital world of finance / Crypto”, launched in 2018.

Some of the companies Dinis created or has been involved with have reached valuations of over USD 1 billion. Dinis has advised, and been responsible for, some top financial organisations, 100 cryptocurrencies worldwide and Fortune 500 companies.

Dinis is involved as a strategist, board member and advisor with Glance Technologies, a payments, lifestyle and blockchain reward community app, for which he built the blockchain messaging, payment and loyalty software Blockimpact; with the seminal Hyperloop Transportation project; with Kora; and with the blockchain cybersecurity project Privus.

He is listed in various global fintech, blockchain, AI and social media industry rankings as a top 10/20 influencer among the top 100, such as Top People In Blockchain | Cointelegraph (https://top.cointelegraph.com/) and https://cryptoweekly.co/100/.

Between 2014 and 2015 he was involved in creating fabbanking.com, a digital bank operating between Asia and Africa, as Chief Commercial Officer and Marketing Officer responsible for all legal, tech and business development. Between 2009 and 2010 he founded tradingfloor.com for Saxo Bank, one of the world’s first fintech social trading platforms.

He is a shareholder of the fintech social money transfer app Moneymailme and of the gamified children’s maths edutech app Gozoa.

He has been a lecturer at Copenhagen Business School, Groupe INSEEC/Monaco University and other leading world universities.